PyTorch tutorial

Tutorial link: PyTorch: Training a Classifier.

Problems encountered when testing with a new dataset, and how they were solved.

Problem 1

error:

|

|

location:

|

|

solution:

|

|

Problem 2

error:

|

|

location:

|

|

solution:

|

|

Problem 3

error:

|

|

location:

|

|

solution:

|

|

Problem 4

error:

|

|

location:

|

|

solution:

|

|

Other

- torchvision.datasets.ImageFolder() loads the label information automatically.

- On the object it returns, len(dataset) gives the number of samples and dataset.classes gives the list of class labels.

2018-10-30

Trainability settings in PyTorch

Two kinds of settings show up in code:

|

|

Read literally, both mean "do not train base_network", but the two give different training results.

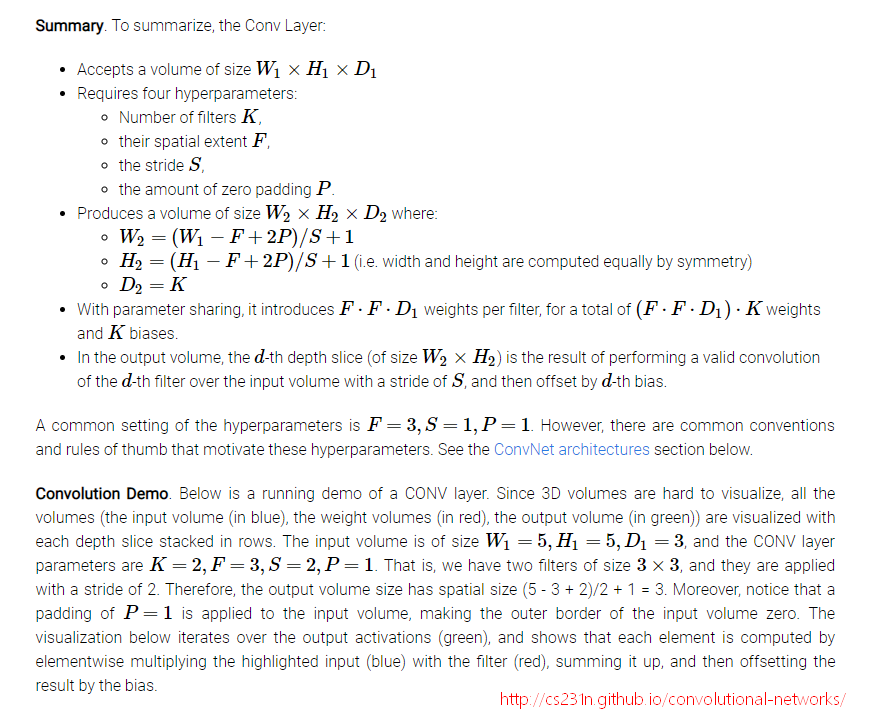

requires_grad

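- An attribute of variables used by PyTorch's automatic differentiation [AUTOGRAD MECHANICS].

- When set to False, no gradient is computed for the variable during backpropagation, so it is not updated.

- Its purpose is to freeze part of your model.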

Module.train(mode)

pytorch doc: Module.train(mode)

It controls the mode-specific behavior of certain modules, such as Dropout and BatchNorm, whose state is not a parameter updated by gradients (BatchNorm: running_mean, running_var; Dropout has no parameters at all).

Even the parameters are the same, it doesn’t mean the inferences are the same.

For dropout, when train(True), it does dropout; when train(False) it doesn’t do dropout (identical output). And for batchnorm, train(True) uses batch mean and batch var; and train(False) uses running mean and running var. [link]

For dropout (there’s even no parameter in dropout), the dropout position is changing when train is True. For BatchNorm, train(True) will use the batch statistics instead of running_mean and running_var, and running_mean and running_var will also change. [link]

A layer doesn’t have requires_grad, only Variables have. running_mean and running_var are buffers, and are updated during forwarding. I assume train(True) will still use the batch mean and batch var. [link]
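The difference between the two settings can be checked with a small experiment. This is a sketch on toy layers, not the original base_network: it sets requires_grad = False on a BatchNorm layer and shows that its buffers still change in train mode, while eval mode leaves them alone.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(16, 4)

# Dropout: random zeros in train mode, identity in eval mode.
drop = nn.Dropout(p=0.5)
drop.train()
train_out = drop(x)
drop.eval()
eval_out = drop(x)
dropout_is_identity_in_eval = torch.equal(eval_out, x)

# BatchNorm with frozen parameters: requires_grad only affects weight and bias.
bn = nn.BatchNorm1d(4)
for param in bn.parameters():
    param.requires_grad = False

running_mean_before = bn.running_mean.clone()
bn.train()
bn(x)  # the forward pass alone updates the running statistics
buffers_changed_in_train = not torch.equal(bn.running_mean, running_mean_before)

running_mean_after_train = bn.running_mean.clone()
bn.eval()
bn(x)  # eval mode reads the running statistics without updating them
buffers_changed_in_eval = not torch.equal(bn.running_mean, running_mean_after_train)
```

So requires_grad = False stops gradient updates, and train(False) stops mode-dependent behavior; a frozen sub-network usually needs both.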

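How to freeze a pretrained ResNet

I am wondering whether to set .eval() for those frozen layers since it may still update its running mean and running var. [link]

Setting .requires_grad = False should work for convolution and FC layers. But how about networks that have instanceNormalization? Is setting .requires_grad = False enough for normalization layers too? [link]

Freezing a pretrained ResNet amounts to using it for prediction only, so the model's state should be set to .eval(). Note that .eval() only switches the behavior of layers such as BatchNorm and Dropout; for the weights themselves to stay fixed, they also have to be kept out of the optimizer or have requires_grad = False.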

|

|
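The original snippet is not available here, so the following is only a sketch of one common way to freeze a backbone. The small base_network is a hypothetical stand-in for a pretrained ResNet, and head is a hypothetical trainable classifier.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for a pretrained backbone (a real ResNet would be loaded instead).
base_network = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)
head = nn.Linear(8, 2)  # the only part that should be trained

# 1) No gradients for the backbone weights.
for param in base_network.parameters():
    param.requires_grad = False
# 2) BatchNorm uses its running statistics and stops updating them.
base_network.eval()

# Only the head's parameters are handed to the optimizer.
optimizer = torch.optim.SGD(head.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()

conv_weight_before = base_network[0].weight.clone()
running_mean_before = base_network[1].running_mean.clone()
head_weight_before = head.weight.clone()

images = torch.randn(4, 3, 16, 16)
labels = torch.tensor([0, 1, 0, 1])

head.train()
optimizer.zero_grad()
loss = criterion(head(base_network(images)), labels)
loss.backward()
optimizer.step()
```

If the backbone is a child of a larger module, calling .train() on the parent switches it back to train mode, so .eval() has to be re-applied to the backbone afterwards.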

refs:

- https://stackoverflow.com/a/48270921/6494418

- https://stackoverflow.com/questions/50233272/pytorch-forward-pass-changes-each-time

- https://discuss.pytorch.org/t/batchnorm-eval-cause-worst-result/15948/6

- https://github.com/bethgelab/foolbox/issues/74

- https://courses.cs.washington.edu/courses/cse490r/18wi/lecture_slides/02_16/pytorch-tutorial.py

2018-10-31

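- When the data are images whose labels are given by folder names, and they are read with torchvision.datasets.ImageFolder() and then wrapped with torch.utils.data.DataLoader(), be sure to pass shuffle=True, otherwise the network fails to train! ImageFolder orders the samples by class, so an unshuffled batch may contain only one class; the gradients are then uninformative and the loss stops decreasing or becomes nan.

- When training on a GPU, move the data to the GPU when it is loaded through the DataLoader rather than inside each iteration; this greatly reduces running time!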
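The effect of shuffle can be seen on a toy dataset. This sketch uses a TensorDataset ordered by class as a stand-in for ImageFolder (an assumption made to avoid needing image files), and counts how many distinct classes each batch contains.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Toy stand-in for an ImageFolder dataset: 50 samples of class 0, then 50 of class 1.
features = torch.randn(100, 3)
labels = torch.cat([
    torch.zeros(50, dtype=torch.long),
    torch.ones(50, dtype=torch.long),
])
dataset = TensorDataset(features, labels)


def classes_per_batch(loader):
    """Return the number of distinct labels in every batch of the loader."""
    return [len(torch.unique(batch_labels)) for _, batch_labels in loader]


ordered = classes_per_batch(DataLoader(dataset, batch_size=10, shuffle=False))
shuffled = classes_per_batch(DataLoader(dataset, batch_size=10, shuffle=True))

# For data small enough to fit in device memory, the tensors can be moved once up front
# instead of on every iteration (falls back to the CPU when no GPU is present).
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
device_dataset = TensorDataset(features.to(device), labels.to(device))
```

Without shuffling every batch holds a single class; with shuffling the batches mix both classes.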

2018-11-13

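A tensor() reportedly cannot be combined directly with non-tensor types such as int: the result comes out as 0. This most likely concerns integer-dtype tensors, where division is integer division (in the PyTorch releases of that time, / on an integer tensor discarded the fraction; in current releases / performs true division).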
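A small sketch of the likely cause, assuming the note refers to integer-dtype tensors: integer division discards the fraction, and converting to float first keeps it.

```python
import torch

counts = torch.tensor([1, 2, 3])   # Python ints give an integer dtype (torch.int64)

floor_div = counts // 4            # integer division: every entry becomes 0
true_div = counts.float() / 4      # convert to float first to keep the fraction
```

Checking tensor.dtype before mixing tensors with Python numbers avoids this kind of silent truncation.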

2018-11-16

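Official tutorial

What is PyTorch?

A Python-based scientific computing package:

- a replacement for NumPy that can use GPUs

- a deep learning research platform

tensor

A tensor is similar to NumPy's ndarray; the difference is that tensors can run on a GPU to accelerate computation.

torch.Tensor is the central class of the package. If you set its attribute .requires_grad as True, it starts to track all operations on it. When you finish your computation you can call .backward() and have all the gradients computed automatically. The gradient for this tensor will be accumulated into .grad attribute.

- Tensor is the core data structure.

- .requires_grad controls whether operations on the Tensor are tracked so that gradients can be computed.

- .backward() computes the gradients.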
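A minimal sketch of requires_grad, backward() and the accumulation into .grad, using a hand-picked function y = sum(x^2) whose gradient 2x is easy to verify.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

y = (x ** 2).sum()
y.backward()                       # computes dy/dx = 2x and stores it in x.grad
first_grad = x.grad.clone()

y_again = (x ** 2).sum()
y_again.backward()                 # a second backward adds to the existing x.grad
accumulated_grad = x.grad.clone()
```

The second call shows why gradients are normally cleared between training steps.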

function

Tensor and Function are interconnected and build up an acyclic graph that encodes the complete history of computation.
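The link between a Tensor and the Function that produced it is exposed through the grad_fn attribute. A small sketch with arbitrary values:

```python
import torch

a = torch.ones(2, 2, requires_grad=True)   # leaf tensor created by the user
b = a + 2                                   # produced by an addition
c = (b * b).mean()                          # produced by a multiplication, then a mean

leaf_has_no_grad_fn = a.grad_fn is None     # leaves were not created by any operation
b_function = type(b.grad_fn).__name__       # name of the Function node that created b
c_function = type(c.grad_fn).__name__       # name of the Function node that created c
parents_of_c = c.grad_fn.next_functions     # edges pointing back toward the inputs
```

Following next_functions from the final result walks the graph back to the leaf tensors.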

autograd

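autograd is central to all neural networks in PyTorch.

gradient

In the backpropagation (backprop) phase, the loss is a scalar.

- The loss function is itself part of the computation graph (its topmost node); gradients are propagated from it back through the graph.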

neural networks

A typical training procedure for a neural network is as follows:

- Define the neural network that has some learnable parameters (or weights)

- Iterate over a dataset of inputs

- Process input through the network

- Compute the loss (how far is the output from being correct)

- Propagate gradients back into the network’s parameters

- Update the weights of the network, typically using a simple update rule:

weight = weight - learning_rate * gradient
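The procedure above can be sketched end to end on a toy linear-regression problem. The data, true_weight, and learning_rate are made up for illustration; the update line is the plain rule quoted in the last step.

```python
import torch

torch.manual_seed(0)

# Toy data: targets are an exact linear function of the inputs.
inputs = torch.randn(64, 3)
true_weight = torch.tensor([[2.0], [-1.0], [0.5]])
targets = inputs @ true_weight

weight = torch.zeros(3, 1, requires_grad=True)   # the learnable parameter
learning_rate = 0.1
losses = []

for step in range(100):
    predictions = inputs @ weight                       # process input
    loss = ((predictions - targets) ** 2).mean()        # compute the loss
    loss.backward()                                     # propagate gradients
    with torch.no_grad():
        weight -= learning_rate * weight.grad           # weight = weight - lr * gradient
    weight.grad.zero_()                                 # clear gradients for the next step
    losses.append(loss.item())
```

In practice the manual update is replaced by an optimizer from torch.optim, which applies the same idea with more elaborate rules.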

define the networks

You just have to define the forward function, and the backward function (where gradients are computed) is automatically defined for you using autograd. You can use any of the Tensor operations in the forward function.
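A minimal sketch of such a definition. TinyNet and its layer sizes are hypothetical and chosen only so that the shapes line up for an 8x8 single-channel input.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 4, kernel_size=3)   # 8x8 -> 6x6
        self.fc1 = nn.Linear(4 * 3 * 3, 10)           # after 2x2 pooling: 3x3

    def forward(self, x):
        # Only forward is written by hand; autograd derives backward from it.
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = torch.flatten(x, 1)
        return self.fc1(x)


net = TinyNet()
output = net(torch.randn(2, 1, 8, 8))
num_param_tensors = len(list(net.parameters()))   # conv weight/bias + fc weight/bias
```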

basic classes

Recap:

- torch.Tensor - A multi-dimensional array with support for autograd operations like backward(). Also holds the gradient w.r.t. the tensor.

- nn.Module - Neural network module. Convenient way of encapsulating parameters, with helpers for moving them to GPU, exporting, loading, etc.

- nn.Parameter - A kind of Tensor, that is automatically registered as a parameter when assigned as an attribute to a Module.

- autograd.Function - Implements forward and backward definitions of an autograd operation. Every Tensor operation creates at least a single Function node that connects to functions that created a Tensor and encodes its history.

backprop

To backpropagate the error all we have to do is to loss.backward(). You need to clear the existing gradients though, else gradients will be accumulated to existing gradients.

Now we shall call loss.backward(), and have a look at conv1’s bias gradients before and after the backward.

|

|
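The original snippet is not available here; the following sketch reproduces the idea on a hypothetical small network whose first layer plays the role of conv1. It inspects the bias gradient before and after loss.backward().

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical small network; net[0] stands in for the tutorial's conv1.
net = nn.Sequential(
    nn.Conv2d(1, 2, kernel_size=3),   # 8x8 -> 6x6
    nn.Flatten(),
    nn.Linear(2 * 6 * 6, 10),
)
conv1 = net[0]
criterion = nn.MSELoss()

output = net(torch.randn(1, 1, 8, 8))
loss = criterion(output, torch.randn(1, 10))

net.zero_grad()                        # clear any existing gradients
grad_before = conv1.bias.grad          # None or zeros, depending on version and history

loss.backward()
grad_after = conv1.bias.grad.clone()   # now holds d(loss)/d(bias)
```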

training a classifier

Code outline:

- loading and normalizing data

- define the neural network

- define loss function and optimizer

- train the network

- test the network

Github: pytorch-tutorial

2018-12-10

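Understanding tensor.detach().

PyTorch aims to be a GPU-accelerated NumPy and to take over NumPy's place in scientific computing in Python.

PyTorch's Python front end works hard to align with NumPy in syntax, naming conventions, and function behavior; the added automatic differentiation and GPU acceleration are meant to attract as wide a range of Python users as possible.

[Link]

So when using PyTorch, automatic differentiation is the main thing that needs attention!

tensor.detach() is the way to turn automatic differentiation off for a tensor [Link].

- (Unlike tensor.detach(), tensor.clone() keeps the source tensor's requires_grad.)

Put simply, it cuts a piece out of the computation graph, and that piece does not need automatic differentiation.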

update

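Purpose: use detach to cut off the gradient flow [Link]

- Returns a new Variable, detached from the current graph. The result will never require gradient.

If the input is volatile, the output will be volatile too.

The returned Variable will never require gradient.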
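A small sketch of how detach() cuts the gradient flow, with arbitrary values. The detached tensor is treated as a constant during backward, while clone() stays in the graph.

```python
import torch

x = torch.tensor([1.0, 2.0], requires_grad=True)
y = x * 3

detached = y.detach()   # same values, cut out of the graph
cloned = y.clone()      # copy that is still part of the graph

# Gradient flows only through y; detached acts as the constant [3, 6].
out = (y * detached).sum()
out.backward()
grad_with_detach = x.grad.clone()   # d/dx sum(3x * c) = 3c = [9, 18]
```

Without the detach, the same expression would be sum((3x)^2) and the gradient would be 18x instead. The detached tensor shares storage with the original, so in-place changes to one are visible in the other.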

Reference:

torch.Tensor.register_hook [link]

register_hook(hook) [SOURCE]

Registers a backward hook.

The hook will be called every time a gradient with respect to the Tensor is computed. The hook should have the following signature:

hook(grad) -> Tensor or None

The hook should not modify its argument, but it can optionally return a new gradient which will be used in place of grad.

This function returns a handle with a method handle.remove() that removes the hook from the module.

In short: it registers a hook that is called during backpropagation!
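A sketch of register_hook covering the two uses discussed in the linked threads: looking at the gradient of an intermediate tensor and reversing it. The function name record_and_reverse is made up for this example.

```python
import torch

x = torch.tensor([1.0, 2.0], requires_grad=True)
hidden = x * 2            # intermediate tensor; its .grad is not kept by default
seen = []


def record_and_reverse(grad):
    """Store the incoming gradient and return its negation in its place."""
    seen.append(grad.clone())
    return -grad


handle = hidden.register_hook(record_and_reverse)
hidden.sum().backward()
handle.remove()           # the hook is no longer called after this
```

The hook sees the gradient [1, 1] flowing into hidden, and because it returns the negated gradient, x.grad ends up as [-2, -2] rather than [2, 2].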

refs:

- https://discuss.pytorch.org/t/solved-reverse-gradients-in-backward-pass/3589

- https://discuss.pytorch.org/t/why-cant-i-see-grad-of-an-intermediate-variable/94

2018-12-13

- Competition takeaways and pitfalls hit in PyTorch (and other tools) [Link]

2018-12-14 20:35:33

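Before using a tool, always look at the pitfalls others have already hit.

For example, when using open-source code from GitHub, first read the issues to see what problems others ran into, then avoid them yourself or at least be prepared.

There is a question on Zhihu whose answers are very constructive: PyTorch 有哪些坑/bug? (What pitfalls/bugs does PyTorch have?)

Some of the answers are very good, for example: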

2019-1-7 22:08:19

Found another good tutorial:

https://github.com/chenyuntc/pytorch-book

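The author, Chen Yun (陈云), a graduate student at BUPT (Beijing University of Posts and Telecommunications), wrote the book 深度学习框架PyTorch:入门与实践 (Deep Learning Framework PyTorch: Introduction and Practice), enjoys sharing, and has posted solid material on both Zhihu and GitHub.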

2019-1-9 17:14:17

https://mp.weixin.qq.com/s/mPmFOm32-ipbiIp8mPSd-A

Huang Haiguang's (黄海广) translation of the official 1.0 tutorials.

2019-1-13 15:31:59

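Should softmax be added in front of an XXXLoss or not?

Some losses need it; others already include the softmax computation.

Specifically:

- nn.BCELoss needs an nn.Sigmoid() in front of it, and the network outputs a one-dimensional vector.

- nn.BCEWithLogitsLoss is equivalent to (nn.Sigmoid() + nn.BCELoss), because the loss function includes the normalization.

- nn.CrossEntropyLoss does not need an nn.Softmax(dim=1) in front of it, because the loss function includes the normalization.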
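These equivalences can be checked numerically. A sketch on random logits and targets (the shapes are arbitrary); the multi-class check uses log_softmax followed by nn.NLLLoss, which is the combination nn.CrossEntropyLoss applies internally.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Binary case: raw scores (logits) and 0/1 targets.
logits = torch.randn(8)
targets = torch.randint(0, 2, (8,)).float()

bce = nn.BCELoss()(torch.sigmoid(logits), targets)        # sigmoid applied by hand
bce_with_logits = nn.BCEWithLogitsLoss()(logits, targets)  # sigmoid built into the loss

# Multi-class case: 5 classes, raw scores per class.
class_logits = torch.randn(8, 5)
class_targets = torch.randint(0, 5, (8,))

cross_entropy = nn.CrossEntropyLoss()(class_logits, class_targets)
nll = nn.NLLLoss()(torch.log_softmax(class_logits, dim=1), class_targets)
```

Applying nn.Softmax before nn.CrossEntropyLoss would normalize twice, which does not raise an error but changes the loss and tends to slow training.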